Previous part:

http://thinkmo.com.cn/details/id/340.html

4.5 Install Neutron

4.5.1 Install and configure the controller node

Create the database:

# Log in to the database
[root@controller /]# mysql -u root -p
# Create the database
MariaDB [(none)]> CREATE DATABASE neutron;
# Grant privileges
MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' \
  IDENTIFIED BY 'NEUTRON_DBPASS';
MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' \
  IDENTIFIED BY 'NEUTRON_DBPASS';
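The same three statements are needed again for every service database (section 4.7.2 repeats them for cinder). As a minimal sketch, a small helper can print them for any service name. The function name `service_db_sql` is hypothetical, and `IF NOT EXISTS` is an addition that is not in the original commands.

```shell
# Print the SQL that creates a service database and grants access to it.
# Usage: service_db_sql <service> <password>
service_db_sql() {
    svc=$1
    pass=$2
    # IF NOT EXISTS is an addition so the statements can be re-run safely.
    printf 'CREATE DATABASE IF NOT EXISTS %s;\n' "$svc"
    for host in localhost '%'; do
        printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'%s' IDENTIFIED BY '%s';\n" \
            "$svc" "$svc" "$host" "$pass"
    done
}

service_db_sql neutron NEUTRON_DBPASS
```

The output can be reviewed first and then piped into the client, for example `service_db_sql neutron NEUTRON_DBPASS | mysql -u root -p`.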

Load the admin credentials to gain access to admin-only CLI commands:

[root@controller /]# . admin-openrc

Create the service credentials:

# Create the neutron user

[root@controller /]# openstack user create --domain default --password-prompt neutron

# This tutorial uses the default password: NEUTRON_PASS

User Password:

Repeat User Password:

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | fdb0f541e28141719b6a43c8944bf1fb |

| name | neutron |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

# Add the admin role to the neutron user

[root@controller /]# openstack role add --project service --user neutron admin

# Create the neutron service entity

[root@controller /]# openstack service create --name neutron \

--description "OpenStack Networking" network

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Networking |

| enabled | True |

| id | f71529314dab4a4d8eca427e701d209e |

| name | neutron |

| type | network |

+-------------+----------------------------------+

Create the Networking service API endpoints:

[root@controller /]# openstack endpoint create --region RegionOne \
  network public http://controller:9696
+--------------+----------------------------------+
| Field        | Value                            |
+--------------+----------------------------------+
| enabled      | True                             |
| id           | 85d80a6d02fc4b7683f611d7fc1493a3 |
| interface    | public                           |
| region       | RegionOne                        |
| region_id    | RegionOne                        |
| service_id   | f71529314dab4a4d8eca427e701d209e |
| service_name | neutron                          |
| service_type | network                          |
| url          | http://controller:9696           |
+--------------+----------------------------------+
[root@controller /]# openstack endpoint create --region RegionOne \
  network internal http://controller:9696
+--------------+----------------------------------+
| Field        | Value                            |
+--------------+----------------------------------+
| enabled      | True                             |
| id           | 09753b537ac74422a68d2d791cf3714f |
| interface    | internal                         |
| region       | RegionOne                        |
| region_id    | RegionOne                        |
| service_id   | f71529314dab4a4d8eca427e701d209e |
| service_name | neutron                          |
| service_type | network                          |
| url          | http://controller:9696           |
+--------------+----------------------------------+
[root@controller /]# openstack endpoint create --region RegionOne \
  network admin http://controller:9696
+--------------+----------------------------------+
| Field        | Value                            |
+--------------+----------------------------------+
| enabled      | True                             |
| id           | 1ee14289c9374dffb5db92a5c112fc4e |
| interface    | admin                            |
| region       | RegionOne                        |
| region_id    | RegionOne                        |
| service_id   | f71529314dab4a4d8eca427e701d209e |
| service_name | neutron                          |
| service_type | network                          |
| url          | http://controller:9696           |
+--------------+----------------------------------+
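Each service gets the same command three times, once per interface (public, internal, admin). A minimal sketch of generating those commands in a loop follows. The helper name `endpoint_cmds` is hypothetical, and the function only prints the commands so they can be checked before anything is run.

```shell
# Print one `openstack endpoint create` command per interface.
# Usage: endpoint_cmds <service-type> <url>
endpoint_cmds() {
    svc_type=$1
    url=$2
    for iface in public internal admin; do
        # The URL is single-quoted so that templates such as %(project_id)s
        # survive when the output is piped to a shell.
        printf "openstack endpoint create --region RegionOne %s %s '%s'\n" \
            "$svc_type" "$iface" "$url"
    done
}

endpoint_cmds network http://controller:9696
```

After sourcing admin-openrc, the printed commands could be executed with `endpoint_cmds network http://controller:9696 | sh`.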

Configure networking:

Two networking options are available: provider networks and self-service networks. This tutorial uses self-service networks.

Install the components:

[root@controller /]# yum install openstack-neutron openstack-neutron-ml2 \
  openstack-neutron-linuxbridge ebtables

Configure neutron.conf (the options that the source listed under several repeated [DEFAULT] headers are merged into one section here):

[root@controller /]# vim /etc/neutron/neutron.conf
[database]
connection = mysql+pymysql://neutron:NEUTRON_DBPASS@controller/neutron
[DEFAULT]
core_plugin = ml2
service_plugins = router
allow_overlapping_ips = true
transport_url = rabbit://openstack:RABBIT_PASS@controller
auth_strategy = keystone
notify_nova_on_port_status_changes = true
notify_nova_on_port_data_changes = true
[keystone_authtoken]
auth_uri = http://controller:5000
auth_url = http://controller:35357
memcached_servers = controller:11211
auth_type = password
project_domain_name = default
user_domain_name = default
project_name = service
username = neutron
password = NEUTRON_PASS
[nova]
auth_url = http://controller:35357
auth_type = password
project_domain_name = default
user_domain_name = default
region_name = RegionOne
project_name = service
username = nova
password = NOVA_PASS
[oslo_concurrency]
lock_path = /var/lib/neutron/tmp

Configure ml2_conf.ini.

Configure the Modular Layer 2 (ML2) plug-in:

[root@controller /]# vim /etc/neutron/plugins/ml2/ml2_conf.ini
[ml2]
type_drivers = flat,vlan,vxlan
tenant_network_types = vxlan
mechanism_drivers = linuxbridge,l2population
extension_drivers = port_security
[ml2_type_flat]
flat_networks = provider
[ml2_type_vxlan]
vni_ranges = 1:1000
[securitygroup]
enable_ipset = true

Configure linuxbridge_agent.ini.

Configure the Linux bridge agent:

# Edit the configuration file
[root@controller /]# vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini
[linux_bridge]
# PROVIDER_INTERFACE_NAME: replace with the name of this host's network interface, e.g. ens33
physical_interface_mappings = provider:PROVIDER_INTERFACE_NAME
[vxlan]
enable_vxlan = true
# OVERLAY_INTERFACE_IP_ADDRESS: replace with the IP address of this host's network interface
local_ip = OVERLAY_INTERFACE_IP_ADDRESS
l2_population = true
[securitygroup]
enable_security_group = true
firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver
# Verify that the Linux kernel supports network bridge filters: both values below must be 1.
[root@controller /]# sysctl net.bridge.bridge-nf-call-iptables
net.bridge.bridge-nf-call-iptables = 1
[root@controller /]# sysctl net.bridge.bridge-nf-call-ip6tables
net.bridge.bridge-nf-call-ip6tables = 1
# (Optional) If either value is not 1, set both manually in /etc/sysctl.conf
[root@controller /]# vim /etc/sysctl.conf
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
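The two sysctl checks above can be folded into one test. The following is a sketch only: the function name `bridge_filter_enabled` is hypothetical, and it reads `sysctl` output from standard input rather than calling `sysctl` itself, so it can be tried on any machine.

```shell
# Succeed only if both bridge netfilter keys are set to 1 in the
# `sysctl` output supplied on standard input.
bridge_filter_enabled() {
    awk -F' *= *' '
        $1 == "net.bridge.bridge-nf-call-iptables"  && $2 == 1 { v4 = 1 }
        $1 == "net.bridge.bridge-nf-call-ip6tables" && $2 == 1 { v6 = 1 }
        END { exit !(v4 && v6) }
    '
}

# Demonstration with canned input in which the IPv6 key is still 0.
printf '%s\n' \
    'net.bridge.bridge-nf-call-iptables = 1' \
    'net.bridge.bridge-nf-call-ip6tables = 0' |
    bridge_filter_enabled || echo "bridge filtering is not fully enabled"
```

On the real host the input would come from `sysctl net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables`. If the keys are missing altogether, the br_netfilter kernel module typically has to be loaded first (`modprobe br_netfilter`), and values added to /etc/sysctl.conf take effect after `sysctl -p`.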

Configure the layer-3 agent.

The layer-3 (L3) agent provides routing and NAT services for self-service virtual networks:

[root@controller /]# vim /etc/neutron/l3_agent.ini
[DEFAULT]
interface_driver = linuxbridge

Configure the DHCP agent.

The DHCP agent provides DHCP services for virtual networks:

[root@controller /]# vim /etc/neutron/dhcp_agent.ini
[DEFAULT]
interface_driver = linuxbridge
dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq
enable_isolated_metadata = true

Configure the metadata agent:

[root@controller /]# vim /etc/neutron/metadata_agent.ini
[DEFAULT]
nova_metadata_host = controller
metadata_proxy_shared_secret = METADATA_SECRET

Configure the Compute service to use the Networking service.

That is, configure Nova to use Neutron.

[root@controller /]# vim /etc/nova/nova.conf
[neutron]
url = http://controller:9696
auth_url = http://controller:35357
auth_type = password
project_domain_name = default
user_domain_name = default
region_name = RegionOne
project_name = service
username = neutron
password = NEUTRON_PASS
service_metadata_proxy = true
metadata_proxy_shared_secret = METADATA_SECRET

Configure the environment:

[root@controller /]# vim /etc/neutron/neutron.conf
# Note: on a dedicated compute node the connection option in [database] is commented out,
# because compute nodes do not access the database directly. In this all-in-one installation
# every node runs on the same host, so the option must stay active. Commenting it out leaves
# the networking components unusable.
[database]
connection = mysql+pymysql://neutron:NEUTRON_DBPASS@controller/neutron
[DEFAULT]
transport_url = rabbit://openstack:RABBIT_PASS@controller
auth_strategy = keystone
[keystone_authtoken]
auth_uri = http://controller:5000
auth_url = http://controller:35357
memcached_servers = controller:11211
auth_type = password
project_domain_name = default
user_domain_name = default
project_name = service
username = neutron
password = NEUTRON_PASS
[oslo_concurrency]
lock_path = /var/lib/neutron/tmp
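The text above does not show the commands that populate the neutron database and start the controller-side services. The sketch below follows the finalization steps of the upstream install guide for this release (RDO packages). Treat it as an assumption about what belongs here, not as the author's own procedure. The helper names `run` and `finalize_neutron_controller` are hypothetical, and by default the script only prints what it would do.

```shell
# Print (DRY_RUN=1, the default) or execute (DRY_RUN=0) a command.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

finalize_neutron_controller() {
    # The Networking init scripts expect plugin.ini to point at the ML2 config.
    run ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini
    # Populate the neutron database.
    run su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron
    # Nova has to re-read the [neutron] section added to nova.conf.
    run systemctl restart openstack-nova-api.service
    services="neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service neutron-l3-agent.service"
    # $services is left unquoted on purpose so that each unit is its own argument.
    run systemctl enable $services
    run systemctl start $services
}

finalize_neutron_controller
```

Running the script as-is lists five commands prefixed with `+`. Setting `DRY_RUN=0` before calling the function executes them instead.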

4.5.2 Install and configure the compute node

Install the components:

[root@controller /]# yum install openstack-neutron-linuxbridge ebtables ipset

(Optional) Configure the common components.

This step can be skipped: in an all-in-one installation the compute node and the controller node are the same host, and neutron.conf was already configured in 4.5.1.

[root@controller /]# vim /etc/neutron/neutron.conf
# Note: on a dedicated compute node the connection option in [database] is commented out,
# because compute nodes do not access the database directly. In this all-in-one installation
# every node runs on the same host, so the option must stay active. Commenting it out leaves
# the networking components unusable.
[database]
connection = mysql+pymysql://neutron:NEUTRON_DBPASS@controller/neutron
[DEFAULT]
transport_url = rabbit://openstack:RABBIT_PASS@controller
auth_strategy = keystone
[keystone_authtoken]
auth_uri = http://controller:5000
auth_url = http://controller:35357
memcached_servers = controller:11211
auth_type = password
project_domain_name = default
user_domain_name = default
project_name = service
username = neutron
password = NEUTRON_PASS
[oslo_concurrency]
lock_path = /var/lib/neutron/tmp

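(Optional) Configure networking.

This step can be skipped: in an all-in-one installation the compute node and the controller node are the same host, and linuxbridge_agent.ini was already configured in 4.5.1.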

Verify that networking is configured correctly:

[root@controller /]# . admin-openrc
# List the loaded extensions to verify that the neutron-server process started successfully
[root@controller /]# openstack extension list --network
+---------------------------+---------------------------+----------------------------+
| Name                      | Alias                     | Description                |
+---------------------------+---------------------------+----------------------------+
| Default Subnetpools       | default-subnetpools       | Provides ability to mark   |
|                           |                           | and use a subnetpool as    |
|                           |                           | the default                |
| Availability Zone         | availability_zone         | The availability zone      |
...... ......

(Optional) Configure the Compute service to use the Networking service.

That is, configure Nova to use Neutron.

This step can be skipped: in an all-in-one installation the compute node and the controller node are the same host, and nova.conf was already configured in 4.5.1.

[root@controller /]# vim /etc/nova/nova.conf
[neutron]
url = http://controller:9696
auth_url = http://controller:35357
auth_type = password
project_domain_name = default
user_domain_name = default
region_name = RegionOne
project_name = service
username = neutron
password = NEUTRON_PASS

Finalize the installation:

Restart the Compute service, then enable the Linux bridge agent at boot and start it:

[root@controller /]# systemctl restart openstack-nova-compute.service
[root@controller /]# systemctl enable neutron-linuxbridge-agent.service
[root@controller /]# systemctl start neutron-linuxbridge-agent.service

4.6 Install Horizon

4.6.1 Horizon installation steps

This component is installed manually from packages, not from source.

Install the dashboard package:

[root@controller /]# yum install openstack-dashboard

Configure local_settings:

[root@controller /]# vim /etc/openstack-dashboard/local_settings

# Set the host name
OPENSTACK_HOST = "controller"

# '*' allows access from any host
ALLOWED_HOSTS = ['*', 'two.example.com']

# Add SESSION_ENGINE
SESSION_ENGINE = 'django.contrib.sessions.backends.cache'

# Replace the existing CACHES setting with the following
CACHES = {
    'default': {
        'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',
        'LOCATION': 'controller:11211',
    }
}

OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

# Change the existing OPENSTACK_API_VERSIONS to:
OPENSTACK_API_VERSIONS = {
    "identity": 3,
    "image": 2,
    "volume": 2,
}

OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "Default"

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"

# (Not needed here) Only required when the provider networks option was chosen
OPENSTACK_NEUTRON_NETWORK = {
    ...
    'enable_router': False,
    'enable_quotas': False,
    'enable_distributed_router': False,
    'enable_ha_router': False,
    'enable_lb': False,
    'enable_firewall': False,
    'enable_vpn': False,
    'enable_fip_topology_check': False,
}

# (Optional) Set the time zone

TIME_ZONE = "TIME_ZONE"

Configure openstack-dashboard.conf:

[root@controller /]# vim /etc/httpd/conf.d/openstack-dashboard.conf

# Add the following line

WSGIApplicationGroup %{GLOBAL}

Finalize the installation:

# Restart the httpd and memcached services
[root@controller /]# systemctl restart httpd.service memcached.service

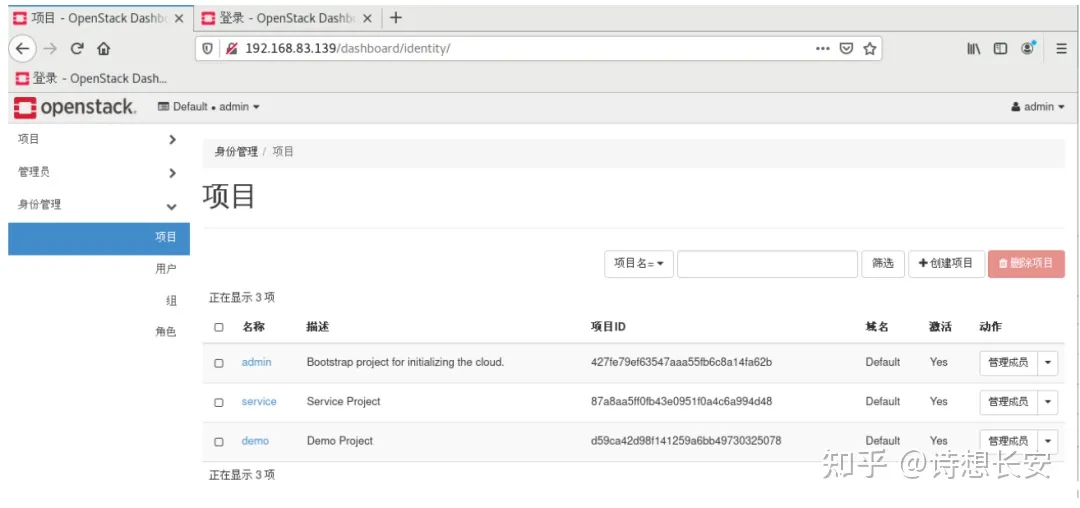

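4.6.2 Verify the installation

Open either of the following addresses in a browser:

# Option 1:
http://controller/dashboard
# Option 2:
http://<IP address of this host's network interface>/dashboard

Log in with:

domain: default

username: admin

password: ADMIN_PASS

Result: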

4.7 Install Cinder

4.7.1 Install and configure the storage node

Install LVM:

[root@controller /]# yum install lvm2 device-mapper-persistent-data

Create the LVM physical volume.

Note: /dev/sdb here is the second disk (likewise, /dev/sda is the first).

[root@controller /]# pvcreate /dev/sdb
Physical volume "/dev/sdb" successfully created

Create the LVM volume group cinder-volumes:

[root@controller /]# vgcreate cinder-volumes /dev/sdb
Volume group "cinder-volumes" successfully created

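Configure lvm.conf:

[root@controller /]# vim /etc/lvm/lvm.conf
# Set the device filter. a: accept, r: reject.
# Configure only ONE of the filter lines below.
# Storage node that uses LVM (the one used in this tutorial)
filter = [ "a/sda/", "a/sdb/", "r/.*/"]
# Compute node that uses LVM
filter = [ "a/sda/", "r/.*/"]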
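LVM checks a device against the filter entries in order and stops at the first one that matches. A device that matches no entry is accepted. The sketch below imitates that evaluation so the effect of each filter line can be seen without touching LVM. The function name `lvm_filter_decision` is hypothetical, and it uses `grep -E` as a stand-in for LVM's own regular-expression matching, which is an approximation.

```shell
# Decide whether a device would be accepted by an ordered list of
# LVM-style filter rules of the form a/<regex>/ or r/<regex>/.
# Usage: lvm_filter_decision <device> [rule...]
lvm_filter_decision() {
    dev=$1
    shift
    for rule in "$@"; do
        action=${rule%%/*}     # leading "a" or "r"
        regex=${rule#?/}       # drop the action and the first slash
        regex=${regex%/}       # drop the trailing slash
        if printf '%s\n' "$dev" | grep -Eq -- "$regex"; then
            if [ "$action" = a ]; then echo accept; else echo reject; fi
            return
        fi
    done
    echo accept                # no rule matched: LVM accepts the device
}

# The storage-node filter from this tutorial.
for dev in /dev/sda /dev/sdb /dev/sdc; do
    printf '%s: %s\n' "$dev" "$(lvm_filter_decision "$dev" a/sda/ a/sdb/ 'r/.*/')"
done
```

With the compute-node filter (`a/sda/ 'r/.*/'`) the same function reports /dev/sdb as rejected, which is why the disk that backs cinder-volumes must be listed explicitly on the storage node.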

Install the cinder components:

[root@controller /]# yum install openstack-cinder targetcli python-keystone

Configure cinder.conf (the options that the source listed under several repeated [DEFAULT] headers are merged into one section here):

[root@controller /]# vim /etc/cinder/cinder.conf
[database]
connection = mysql+pymysql://cinder:CINDER_DBPASS@controller/cinder
[DEFAULT]
transport_url = rabbit://openstack:RABBIT_PASS@controller
auth_strategy = keystone
# Set to the IP address of this host's network interface
my_ip = MANAGEMENT_INTERFACE_IP_ADDRESS
enabled_backends = lvm
glance_api_servers = http://controller:9292
[keystone_authtoken]
auth_uri = http://controller:5000
auth_url = http://controller:5000
memcached_servers = controller:11211
auth_type = password
project_domain_id = default
user_domain_id = default
project_name = service
username = cinder
password = CINDER_PASS
[lvm]
volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver
volume_group = cinder-volumes
iscsi_protocol = iscsi
iscsi_helper = lioadm
[oslo_concurrency]
lock_path = /var/lib/cinder/tmp

Finalize the installation:

[root@controller /]# systemctl enable openstack-cinder-volume.service target.service
[root@controller /]# systemctl start openstack-cinder-volume.service target.service

4.7.2 Install and configure the controller node

Install and configure the Block Storage service on the controller node.

Create the database:

# Log in to the database
[root@controller /]# mysql -u root -p
# Create the cinder database
MariaDB [(none)]> CREATE DATABASE cinder;
# Grant privileges
MariaDB [(none)]> GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'localhost' \
  IDENTIFIED BY 'CINDER_DBPASS';
MariaDB [(none)]> GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' \
  IDENTIFIED BY 'CINDER_DBPASS';

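Create the service credentials:

# Load the admin credentials to gain access to admin-only CLI commands

[root@controller /]# . admin-openrc

# Create the cinder user

[root@controller /]# openstack user create --domain default --password-prompt cinder

# This tutorial uses the default password: CINDER_PASS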

User Password:

Repeat User Password:

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 9d7e33de3e1a498390353819bc7d245d |

| name | cinder |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

# Add the admin role to the cinder user

[root@controller /]# openstack role add --project service --user cinder admin

# Create the cinder service entities

# cinderv2

[root@controller /]# openstack service create --name cinderv2 \

--description "OpenStack Block Storage" volumev2

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Block Storage |

| enabled | True |

| id | eb9fd245bdbc414695952e93f29fe3ac |

| name | cinderv2 |

| type | volumev2 |

+-------------+----------------------------------+

# cinderv3

[root@controller /]# openstack service create --name cinderv3 \

--description "OpenStack Block Storage" volumev3

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Block Storage |

| enabled | True |

| id | ab3bbbef780845a1a283490d281e7fda |

| name | cinderv3 |

| type | volumev3 |

+-------------+----------------------------------+

Create the Block Storage service API endpoints.

Create the v2 endpoints:

[root@controller /]# openstack endpoint create --region RegionOne \
  volumev2 public http://controller:8776/v2/%\(project_id\)s
+--------------+------------------------------------------+
| Field        | Value                                    |
+--------------+------------------------------------------+
| enabled      | True                                     |
| id           | 513e73819e14460fb904163f41ef3759         |
| interface    | public                                   |
| region       | RegionOne                                |
| region_id    | RegionOne                                |
| service_id   | eb9fd245bdbc414695952e93f29fe3ac         |
| service_name | cinderv2                                 |
| service_type | volumev2                                 |
| url          | http://controller:8776/v2/%(project_id)s |
+--------------+------------------------------------------+
[root@controller /]# openstack endpoint create --region RegionOne \
  volumev2 internal http://controller:8776/v2/%\(project_id\)s
+--------------+------------------------------------------+
| Field        | Value                                    |
+--------------+------------------------------------------+
| enabled      | True                                     |
| id           | 6436a8a23d014cfdb69c586eff146a32         |
| interface    | internal                                 |
| region       | RegionOne                                |
| region_id    | RegionOne                                |
| service_id   | eb9fd245bdbc414695952e93f29fe3ac         |
| service_name | cinderv2                                 |
| service_type | volumev2                                 |
| url          | http://controller:8776/v2/%(project_id)s |
+--------------+------------------------------------------+
[root@controller /]# openstack endpoint create --region RegionOne \
  volumev2 admin http://controller:8776/v2/%\(project_id\)s
+--------------+------------------------------------------+
| Field        | Value                                    |
+--------------+------------------------------------------+
| enabled      | True                                     |
| id           | e652cf84dd334f359ae9b045a2c91d96         |
| interface    | admin                                    |
| region       | RegionOne                                |
| region_id    | RegionOne                                |
| service_id   | eb9fd245bdbc414695952e93f29fe3ac         |
| service_name | cinderv2                                 |
| service_type | volumev2                                 |
| url          | http://controller:8776/v2/%(project_id)s |
+--------------+------------------------------------------+

Create the v3 endpoints:

[root@controller /]# openstack endpoint create --region RegionOne \
  volumev3 public http://controller:8776/v3/%\(project_id\)s
+--------------+------------------------------------------+
| Field        | Value                                    |
+--------------+------------------------------------------+
| enabled      | True                                     |
| id           | 03fa2c90153546c295bf30ca86b1344b         |
| interface    | public                                   |
| region       | RegionOne                                |
| region_id    | RegionOne                                |
| service_id   | ab3bbbef780845a1a283490d281e7fda         |
| service_name | cinderv3                                 |
| service_type | volumev3                                 |
| url          | http://controller:8776/v3/%(project_id)s |
+--------------+------------------------------------------+
[root@controller /]# openstack endpoint create --region RegionOne \
  volumev3 internal http://controller:8776/v3/%\(project_id\)s
+--------------+------------------------------------------+
| Field        | Value                                    |
+--------------+------------------------------------------+
| enabled      | True                                     |
| id           | 94f684395d1b41068c70e4ecb11364b2         |
| interface    | internal                                 |
| region       | RegionOne                                |
| region_id    | RegionOne                                |
| service_id   | ab3bbbef780845a1a283490d281e7fda         |
| service_name | cinderv3                                 |
| service_type | volumev3                                 |
| url          | http://controller:8776/v3/%(project_id)s |
+--------------+------------------------------------------+
[root@controller /]# openstack endpoint create --region RegionOne \
  volumev3 admin http://controller:8776/v3/%\(project_id\)s
+--------------+------------------------------------------+
| Field        | Value                                    |
+--------------+------------------------------------------+
| enabled      | True                                     |
| id           | 4511c28a0f9840c78bacb25f10f62c98         |
| interface    | admin                                    |
| region       | RegionOne                                |
| region_id    | RegionOne                                |
| service_id   | ab3bbbef780845a1a283490d281e7fda         |
| service_name | cinderv3                                 |
| service_type | volumev3                                 |
| url          | http://controller:8776/v3/%(project_id)s |
+--------------+------------------------------------------+

Install the cinder components:

[root@controller /]# yum install openstack-cinder

Configure cinder.conf:

[root@controller /]# vim /etc/cinder/cinder.conf
[database]
connection = mysql+pymysql://cinder:CINDER_DBPASS@controller/cinder
[DEFAULT]
transport_url = rabbit://openstack:RABBIT_PASS@controller
auth_strategy = keystone
my_ip = 10.0.0.11
[keystone_authtoken]
auth_uri = http://controller:5000
auth_url = http://controller:5000
memcached_servers = controller:11211
auth_type = password
project_domain_id = default
user_domain_id = default
project_name = service
username = cinder
password = CINDER_PASS
[oslo_concurrency]
lock_path = /var/lib/cinder/tmp

Populate the database:

[root@controller /]# su -s /bin/sh -c "cinder-manage db sync" cinder

Configure Nova to use Block Storage:

[root@controller /]# vim /etc/nova/nova.conf
[cinder]
os_region_name = RegionOne

Finalize the installation:

[root@controller /]# systemctl restart openstack-nova-api.service
[root@controller /]# systemctl enable openstack-cinder-api.service openstack-cinder-scheduler.service
[root@controller /]# systemctl start openstack-cinder-api.service openstack-cinder-scheduler.service

4.7.3 (Optional) Install and configure the backup service

This tutorial does not install Swift, so this step can be skipped.

Install cinder:

[root@controller /]# yum install openstack-cinder

Configure cinder.conf:

[root@controller /]# vim /etc/cinder/cinder.conf
[DEFAULT]
backup_driver = cinder.backup.drivers.swift
# Replace SWIFT_URL with the URL of the Object Storage service.
# The value can be looked up with: openstack catalog show object-store
backup_swift_url = SWIFT_URL

Finalize the installation:

[root@controller /]# systemctl enable openstack-cinder-backup.service
[root@controller /]# systemctl start openstack-cinder-backup.service

4.7.4 Verify the installation

[root@controller /]# . admin-openrc
[root@controller /]# openstack volume service list
# Swift is not installed here, so only the storage node and controller node services are listed
+------------------+------------+------+---------+-------+----------------------------+
| Binary           | Host       | Zone | Status  | State | Updated_at                 |
+------------------+------------+------+---------+-------+----------------------------+
| cinder-scheduler | controller | nova | enabled | up    | 2016-09-30T02:27:41.000000 |
| cinder-volume    | block@lvm  | nova | enabled | up    | 2016-09-30T02:27:46.000000 |
+------------------+------------+------+---------+-------+----------------------------+
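Reading the State column by eye works for two services, but the check can also be scripted. The sketch below assumes the list is requested in the client's plain `value` format with only the Binary, Host and State columns, and reports every service whose state is not `up`. The function name `down_volume_services` is hypothetical.

```shell
# Read "<binary> <host> <state>" lines on standard input and print
# every service whose state is not "up".
down_volume_services() {
    awk '$3 != "up" { print $1 " on " $2 }'
}

# Demonstration with canned input in which cinder-volume is down.
printf '%s\n' \
    'cinder-scheduler controller up' \
    'cinder-volume block@lvm down' |
    down_volume_services
```

On the controller the input would come from `openstack volume service list -f value -c Binary -c Host -c State`. An empty result means every service is up.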

1. After installing Keystone, running the command openstack domain create --description "An Example Domain" example

fails with the error:

Failed to discover available identity versions when contacting http://controller:5000/v3. Attempting to parse version from URL.

Unable to establish connection to http://controller:5000/v3/auth/tokens: HTTPConnectionPool(host='controller', port=5000): Max retries exceeded with url: /v3/auth/tokens (Caused by NewConnectionError('<urllib3.connection.HTTPConnection object at 0x7fa20bba02b0>: Failed to establish a new connection: [Errno -3] Temporary failure in name resolution',))

Solution:

Disable SELinux, then run setenforce 0 (or reboot the system).

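2. Notes on configuring the network interface

When configuring the network, first use DHCP to obtain an IP address for the virtual machine's network interface, then switch the interface to static and assign that same address. If the interface is simply left on DHCP, the address may become unusable and stop answering ping.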

3. Installing any OpenStack component with the command yum install xxxx -y

fails with the error:

Error downloading packages:
python-dogpile-cache-0.6.2-1.el7.noarch: [Errno 256] No more mirrors to try.
python-keyring-5.7.1-1.el7.noarch: [Errno 256] No more mirrors to try.
python-dogpile-core-0.4.1-2.el7.noarch: [Errno 256] No more mirrors to try.
python-cmd2-0.6.8-8.el7.noarch: [Errno 256] No more mirrors to try.

Solution:

(1) Option 1: run the install command again: yum install python-openstackclient -y.

(2) Option 2: run yum update, then yum install python-openstackclient -y. If the error still occurs, for example: Error downloading packages: lttng-ust-2.10.0-1.el7.x86_64: [Errno 256] No more mirrors to try,

run yum clean all and yum makecache, then run yum update again.

4. Notes on logging in to Horizon

Address: http://controller/dashboard

If that does not work, use http://<IP address of controller>/dashboard instead.

Log in with:

domain: default

username: admin

password: ADMIN_PASS

5. Disable SELinux

**Otherwise the following error occurs:** the request you have made requires authentication (HTTPConnection 401).

Solution:

[root@controller /]# vim /etc/selinux/config
SELINUX=disabled
SELINUXTYPE=targeted

Then run setenforce 0 (or reboot the system).

6. Notes on attaching a disk in VMware

Check whether the disk is visible:

[root@controller /]# fdisk -l

If the newly added disk does not show up, rescan the SCSI hosts:

# 1. Go to the directory that lists the SCSI hosts
[root@controller /]# cd /sys/class/scsi_host/
# 2. List the entries in this directory
[root@controller /]# ls
host0 host1 host2
# 3. Trigger a rescan on each host listed above
[root@controller /]# echo "- - -" > /sys/class/scsi_host/host0/scan
[root@controller /]# echo "- - -" > /sys/class/scsi_host/host1/scan
[root@controller /]# echo "- - -" > /sys/class/scsi_host/host2/scan
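The number of hostN entries differs from machine to machine, so the rescan is easier to write as a loop. This is a minimal sketch: the function name `rescan_scsi_hosts` is hypothetical, and the optional directory argument exists only so the function can be tried against a scratch directory instead of the real /sys tree.

```shell
# Ask every SCSI host adapter to rescan its bus.
# Usage: rescan_scsi_hosts [base-dir]   (default: /sys/class/scsi_host)
rescan_scsi_hosts() {
    base=${1:-/sys/class/scsi_host}
    for host in "$base"/host*; do
        # Skip anything that has no scan file (also covers "no hosts found").
        [ -e "$host/scan" ] || continue
        echo "- - -" > "$host/scan"
        echo "rescanned ${host##*/}"
    done
}
```

Run `rescan_scsi_hosts` as root with no argument, then check `fdisk -l` again.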

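7. After the virtual machine is rebooted, the openstack command no longer works.

Cause:

The environment variables are set with export, so they exist only in the terminal session that ran the commands. They are gone after a shutdown, and a newly opened terminal does not have them either.

Solution: source the credentials file again to set the environment variables.

[root@controller /]# . admin-openrc

# Contents of the admin-openrc file
[root@controller /]# vim admin-openrc
export OS_PROJECT_DOMAIN_NAME=Default
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_NAME=admin
export OS_USERNAME=admin
export OS_PASSWORD=ADMIN_PASS
export OS_AUTH_URL=http://controller:5000/v3
export OS_IDENTITY_API_VERSION=3
export OS_IMAGE_API_VERSION=2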
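A quick way to tell whether the current terminal has already sourced admin-openrc is to check for the variables the file exports. The sketch below lists the ones that are missing. The function name `missing_os_vars` is hypothetical, and the variable list is taken from the file contents shown above.

```shell
# Print the name of every admin-openrc variable that is unset or empty
# in the current shell.
missing_os_vars() {
    for name in OS_PROJECT_DOMAIN_NAME OS_USER_DOMAIN_NAME OS_PROJECT_NAME \
                OS_USERNAME OS_PASSWORD OS_AUTH_URL \
                OS_IDENTITY_API_VERSION OS_IMAGE_API_VERSION; do
        # Indirect lookup of the variable called $name (POSIX sh has no ${!name}).
        eval "value=\${$name:-}"
        if [ -z "$value" ]; then
            echo "$name"
        fi
    done
}

missing_os_vars
```

If the function prints anything, run `. admin-openrc` in that terminal before using the openstack command.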

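8. openstack volume service list shows that cinder-volume is not running.

Cause: if the storage node uses LVM on the operating system disk, the associated device must also be added to the LVM filter.

Solution: when configuring the storage node, list the corresponding devices in the filter.

# Edit the configuration file /etc/lvm/lvm.conf

[root@controller /]# vim /etc/lvm/lvm.conf

devices {

...

filter = [ "a/sda/", "a/sdb/", "r/.*/"]

}

9. Nova fails to start

Cause: the firewall blocks access to port 5672.

Solution:

# Check the nova-compute log: it shows that the port is not reachable
cat /var/log/nova/nova-compute.log
# Stop and disable the firewall
systemctl stop firewalld
systemctl disable firewalld
# Start the nova services again
systemctl start libvirtd.service openstack-nova-compute.service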

(End of series)